AI

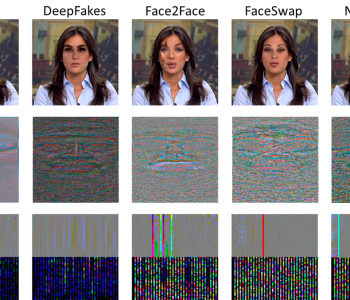

Can data poisoning thwart face recognition systems?

This is an application of data poisoning to ML-based face recognition systems. It is an interesting approach, but could it be scaled in practice so that everyone can use it seamlessly?

Fawkes isn’t intended to keep a facial recognition system like Facebook’s from recognizing someone in a single photo. It’s trying to more broadly corrupt facial recognition systems, performing an algorithmic attack called data poisoning. The researchers said that, ideally, people would start cloaking all the images they uploaded. That would mean a company like Clearview that scrapes those photos wouldn’t be able to create a functioning database, because an unidentified photo of you from the real world wouldn’t match the template of you that Clearview would have built over time from your online photos.

https://www.nytimes.com/2020/08/03/technology/fawkes-tool-protects-photos-from-facial-recognition.html
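
To make the idea in the quote concrete, here is a toy sketch of why a template built from cloaked photos would fail to match an uncloaked one. It is not the Fawkes algorithm: the real tool perturbs pixels against deep feature extractors, whereas this sketch assumes made-up 2-D "embeddings" and a simple nearest-centroid matcher. All names (`build_templates`, `identify`, "alice", "bob") and all numbers are hypothetical.

```python
import math

def centroid(points):
    """Average a list of equal-length embedding tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

def build_templates(labeled):
    """Build one template (centroid) per identity from scraped embeddings."""
    return {name: centroid(pts) for name, pts in labeled.items()}

def identify(templates, probe):
    """Return the identity whose template is closest to the probe embedding."""
    return min(templates, key=lambda name: math.dist(templates[name], probe))

# Made-up embeddings (assumption): each identity clusters in its own region.
bob = [(0.0, 1.0), (0.1, 0.9), (-0.1, 1.1)]
alice_clean = [(1.0, 0.1), (0.9, -0.1), (1.1, 0.0)]
# Cloaked uploads look like Alice to a human, but we assume their embeddings
# have been pushed far away from her true region of feature space.
alice_cloaked = [(-1.0, -0.9), (-0.9, -1.1), (-1.1, -1.0)]

# An uncloaked photo of Alice taken "in the real world".
probe = (1.0, 0.0)

clean_db = build_templates({"alice": alice_clean, "bob": bob})
poisoned_db = build_templates({"alice": alice_cloaked, "bob": bob})

print(identify(clean_db, probe))     # template built from clean photos
print(identify(poisoned_db, probe))  # template built from cloaked photos
```

With the clean database the probe lands on Alice's template; with the poisoned one it is closer to Bob's, so the match fails. The sketch assumes every scraped photo of Alice is cloaked, which is the ideal case the researchers describe rather than the situation today.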

However, is it already too late to solve this by technical means, so that only legislation can regulate it?

But Clearview’s chief executive, Hoan Ton-That, ran a version of my Facebook experiment on the Clearview app and said the technology did not interfere with his system. In fact, he said, his company could use images cloaked by Fawkes to improve its ability to make sense of altered images. “There are billions of unmodified photos on the internet, all on different domain names,” Mr. Ton-That said. “In practice, it’s almost certainly too late to perfect a technology like Fawkes and deploy it at scale.”

https://www.nytimes.com/2020/08/03/technology/fawkes-tool-protects-photos-from-facial-recognition.html